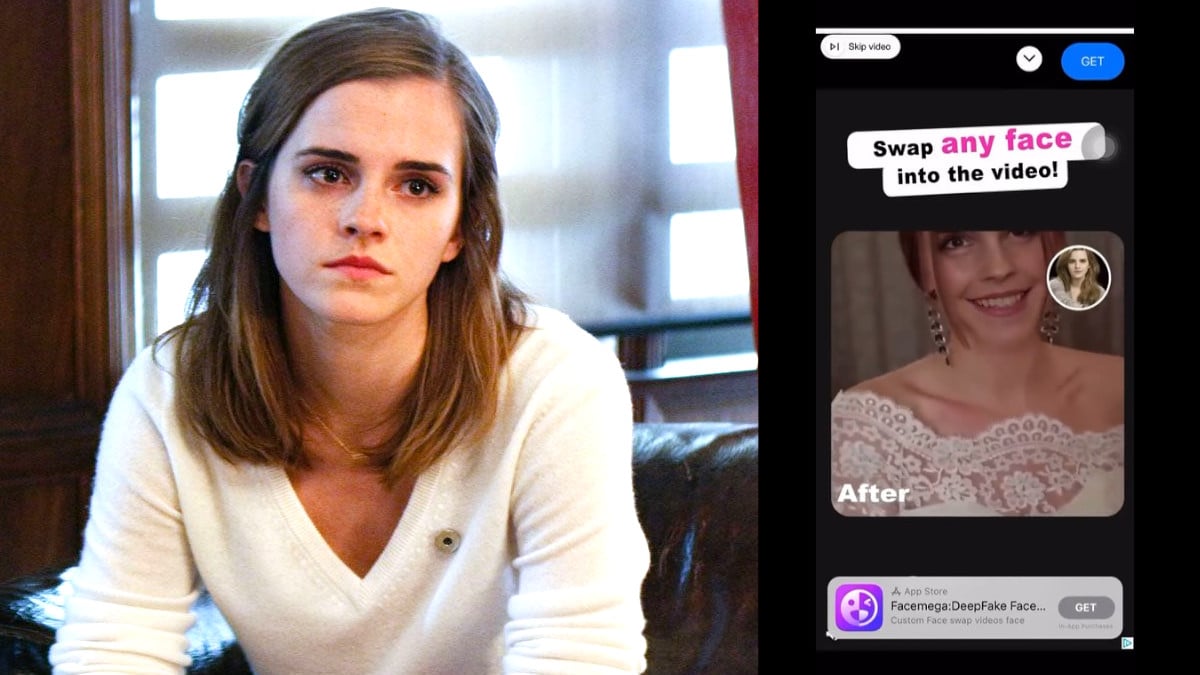

Beginning on March 5, 2023, many social media and app users were shocked when they came across advertisements for FaceMega, a “DeepFake Face Swapping” app. The deepfake advertisements appear to show famous actresses, such as Emma Watson, smiling seductively at the camera and moving into sexually suggestive positions. While nothing explicit is shown in the seconds-long clips, they could easily have been the opening of a pornographic video.

Of course, the woman in the video is not Watson. Nor is this the first time the actress has been the victim of deepfake content that uses her likeness for sexual material without her consent. For those unfamiliar with the term, a deepfake is a form of video or image manipulation that uses artificial intelligence (AI) to convincingly alter the face or body of an individual. A well-known example is the cameo by a young Luke Skywalker (Mark Hamill) in The Mandalorian, which Disney created largely with deepfake technology and a little CGI.

However, deepfakes aren’t confined to the film industry; they have also been widely used on the internet for malicious purposes. Although the technique has existed in some form since the 1990s, the term “deepfake” was coined in 2017 by a Redditor who used the technology to create pornographic videos. The use of deepfakes for adult material has become increasingly concerning, as nearly anyone’s face can be superimposed onto graphic content and circulated on the internet without their consent.

App stores offer deepfake tools that can be used for harassment

Amid rising concerns over the malicious use of deepfakes, many are questioning why app stores offer easy access to these tools. To make matters worse, some apps are running advertisements that actually encourage the malicious use of their tools. Twitter user and independent journalist @laurenbarton03 was so shocked when she came across a sexual deepfake ad using Watson’s likeness that she screengrabbed it. She explained that she had been using a sticker app called Background Eraser ~ Stickers when the ad popped up.

However, this wasn’t a one-off occurrence. In addition to appearing in certain apps, the ads spread across social media: NBC News reported that 230 of them ran on Meta’s platforms, including Facebook, Instagram, and Messenger. Both Watson and Scarlett Johansson were victims of the app’s deepfake ads, though Watson’s likeness was used in the majority of them. Meta has not publicly addressed the ads, but they were removed from its platforms by March 7. The FaceMega app is also no longer available on the App Store. Before it was taken down, though, the app was listed as suitable for users aged 9+.

The advertisements quickly sparked backlash from social media users. NBC News reporter Kat Tenbarge pointed out that the app was making it easier for women to be sexually harassed, humiliated, and discriminated against.

Even though these women are victims whose likenesses are being used in sexual content without their consent, they are still likely to face discrimination because of it. Victims could lose their jobs, face the wrath of an abusive partner, or be subjected to cyberbullying, harassment, and stalking. Deepfake technology can easily ruin someone’s life in a matter of minutes. So why are apps still making these tools available? In some cases, these deepfake and face-swapping apps are free. FaceMega cost just $8 per month, and although its terms of service stated that users could not “impersonate any person” or submit content that was “sexually explicit,” it is unclear how well these rules were enforced, especially when the app’s own advertisements did not follow them.

Emma Watson and Scarlett Johansson have been deepfake targets before

Sadly, both Watson and Johansson have been victims of deepfake videos in the past. While Johansson has not responded to the FaceMega advertisements, she spoke out about deepfake porn back in 2019. In her statement, she called trying to fight deepfake porn “useless.” She noted that copyright laws and the rights to one’s own image vary from country to country. As a result, even someone who succeeds in taking down a site or image in the U.S. is not guaranteed the same outcome in the rest of the world the internet reaches. Because of the “lawlessness” of the internet, even those with far more resources than the average person find it futile to combat deepfake porn.

Four years later, Johansson’s remarks remain relevant as deepfakes continue to thrive in the lawlessness of the internet. Earlier this year, streamer QTCinderella became a victim of deepfake porn after Twitch streamer Atrioc accidentally revealed, during a livestream, a website hosting graphic deepfake images of her and other female streamers. She initially vowed legal action, but weeks later revealed that the legal system made it nearly impossible for her to sue the creators of the content. This is because, while nearly every state has laws making it illegal to share sexual content of an individual without consent, almost none of these laws extend to deepfake images.

As the years go by, the situation only grows more frustrating. Years after deepfake porn first became a problem, the legal system still offers little to no protection for its victims. Meanwhile, the FaceMega advertisements and app show that this technology is becoming ever more readily available to the public. Apps now provide these tools at minimal cost, in a format that nearly anyone can use, not just people with technical experience. While Meta, Google, and Apple have tried to act against AI-generated adult content and apps advertising deepfake porn creation, their efforts still fall short of catching every violation of their rules. And in the time it takes them to catch perpetrators like FaceMega, hundreds of ads may already have circulated widely online, with life-altering consequences for victims.

(featured image: STXfilms / FaceMega)

Published: Mar 9, 2023 06:01 am